Note: I have read Alex Xu's book System Design Interview: An Insider's Guide in depth, so most of the definitions and images in this article are taken from it. They give a clear picture of what is happening.

We will first build a system that handles a single user and then gradually scale it to serve millions of users. This article does not go deep into any one topic. Instead, like a Swiss Army knife, it gives you all the concepts as a starting point for further reading and for your own system design problems.

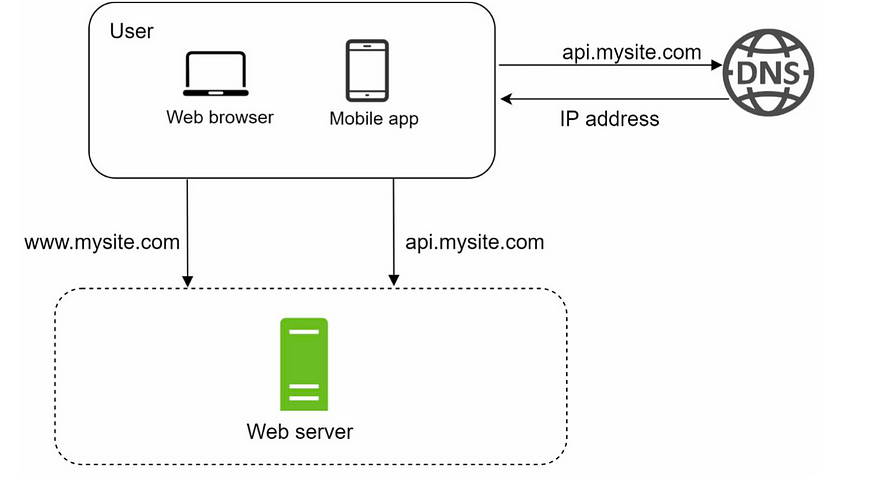

Let’s first look at this single server setup which can serve a single user easily:

What do we have here?

Web browser/Mobile app as a user, DNS, Web server and a few other things like domain name, IP address etc.

Requests will be coming from the User and our only Web server will be processing the request and serving the response.

We see a new term here: what is DNS?

Just like the phonebook stores the mapping of a person’s name to their phone numbers (excluding those people who can memorise contacts), we have DNS which stores the mapping of domain names to IP addresses.

The domain name system (DNS) is the Internet’s naming service that maps human-friendly domain names to machine-readable IP addresses. The service of DNS is transparent to users. When a user enters a domain name in the browser, the browser has to translate the domain name to IP address by asking the DNS infrastructure. Once the desired IP address is obtained, the user’s request is forwarded to the destination web server.

DNS is not a single server that does all this. It is a complete architecture in itself, consisting of name servers, resource records (RR), caching, a hierarchy, etc.

All these terminologies mentioned above are explained in detail here.
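To make the phonebook idea concrete, here is a toy sketch in Python of a resolver that caches its answers. The domain name, the IP address (taken from a documentation-only range), the TTL value, and the class name are all assumptions made up for illustration. Real DNS involves a hierarchy of name servers that this sketch does not model.

```python
import time

# Hypothetical records standing in for the real DNS infrastructure.
AUTHORITATIVE_RECORDS = {"api.mysite.example": "203.0.113.10"}


class CachingResolver:
    """Maps domain names to IP addresses and caches answers for a TTL."""

    def __init__(self, records, ttl_seconds=60.0):
        self._records = records
        self._ttl = ttl_seconds
        self._cache = {}  # domain -> (ip, expires_at)
        self.upstream_lookups = 0

    def resolve(self, domain):
        now = time.monotonic()
        entry = self._cache.get(domain)
        if entry is not None and entry[1] > now:
            return entry[0]  # answered from the local cache
        # Cache miss or expired entry: ask the (simulated) DNS infrastructure.
        self.upstream_lookups += 1
        ip = self._records[domain]  # raises KeyError for unknown domains
        self._cache[domain] = (ip, now + self._ttl)
        return ip


resolver = CachingResolver(AUTHORITATIVE_RECORDS)
print(resolver.resolve("api.mysite.example"))
```

The second lookup for the same domain within the TTL is served from the cache, which is one reason DNS stays fast even though it is queried constantly.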

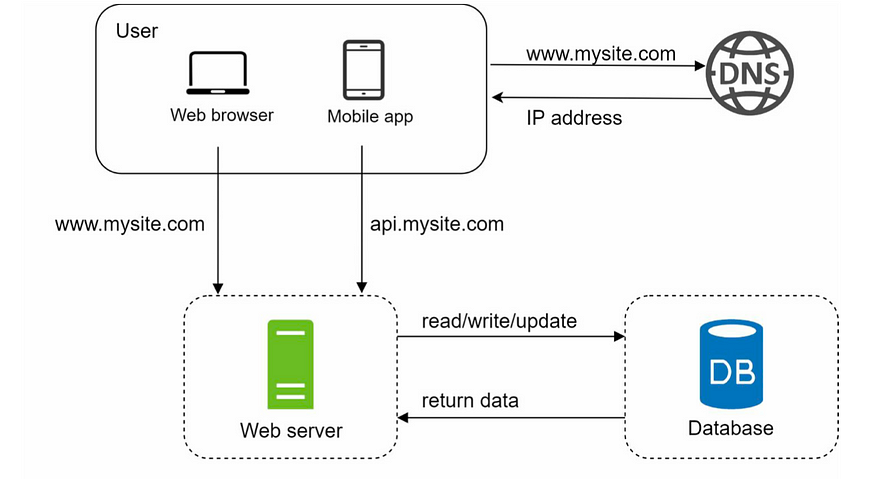

Let’s separate our design into web tier and data tier to scale our system a bit and to be able to serve more users.

Our web servers will do all the processing, but our data is persisted in the database. We can perform CRUD (Create, Read, Update, Delete) operations on the database and further process our data before sending back the response in HTML, JSON formats etc.
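As a rough sketch of the web tier talking to the data tier, the snippet below runs the four CRUD operations against an in-memory SQLite database and returns JSON. The `users` table and the function names are hypothetical. A real deployment would use a separate database server rather than an in-process one.

```python
import json
import sqlite3

# The "data tier": an in-memory database stands in for a real DB server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")


def create_user(name):
    cur = conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
    conn.commit()
    return cur.lastrowid


def read_user(user_id):
    row = conn.execute(
        "SELECT id, name FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if row is None:
        return json.dumps({"error": "not found"})
    # The "web tier" shapes the stored data into a JSON response.
    return json.dumps({"id": row[0], "name": row[1]})


def update_user(user_id, name):
    conn.execute("UPDATE users SET name = ? WHERE id = ?", (name, user_id))
    conn.commit()


def delete_user(user_id):
    conn.execute("DELETE FROM users WHERE id = ?", (user_id,))
    conn.commit()
```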

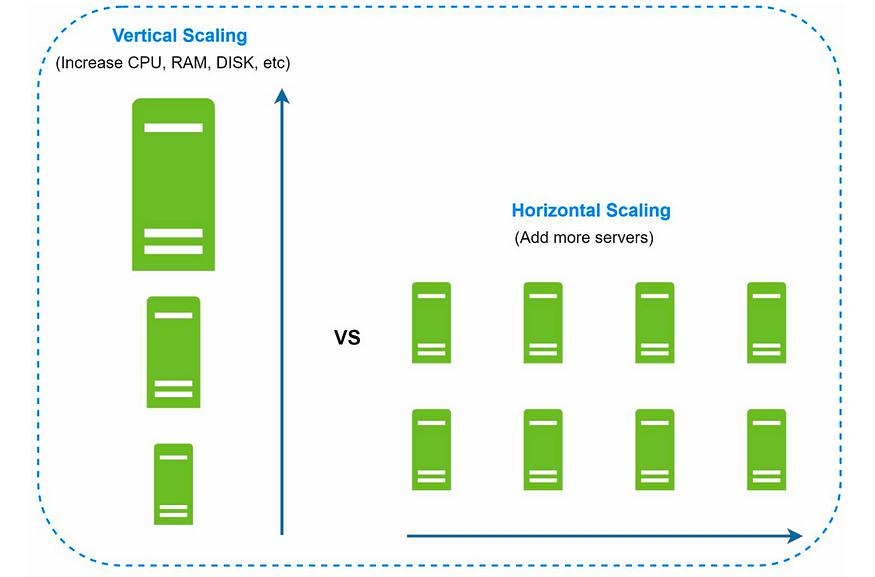

Vertical vs Horizontal Scaling

Vertical scaling, referred to as “scale up”, means the process of adding more power (CPU, RAM, etc.) to your servers. Horizontal scaling, referred to as “scale-out”, allows you to scale by adding more servers into your pool of resources.

Vertical scaling is easier to do but isn't cost-effective. It also leaves a SPOF (Single Point Of Failure), because there is only one very powerful server. If it goes down, the whole application goes down.

In another scenario, if many users access the web server simultaneously and it reaches the web server’s load limit, users generally experience slower responses or fail to connect to the server.

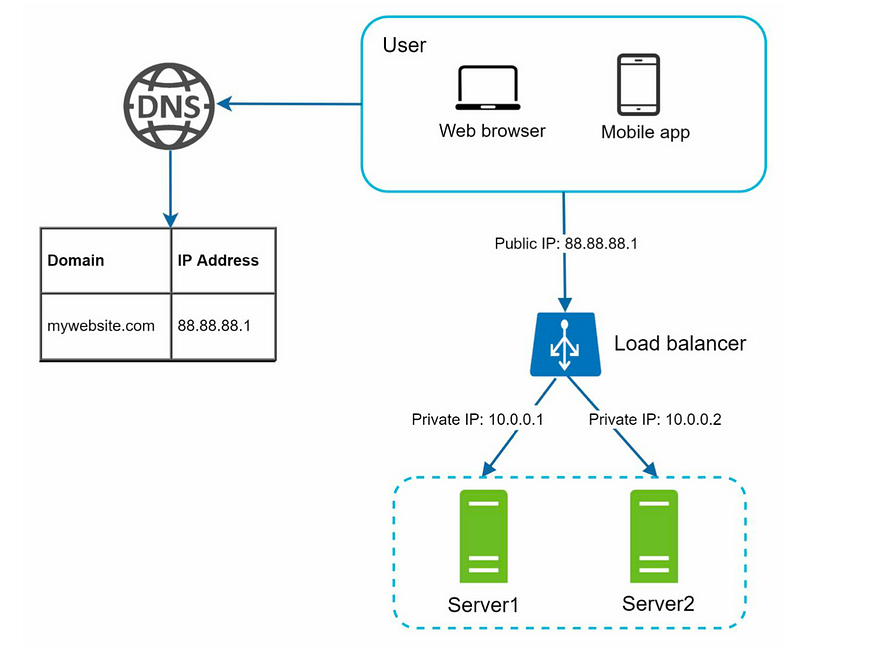

To understand Horizontal scaling, let’s first understand the Load Balancer.

Load Balancer

A load balancer evenly distributes incoming traffic among web servers that are defined in a load-balanced set. The user connects to the public IP of the load balancer which further connects with the private IP of our defined servers.

Now at this point, we have handled issues of SPOF and single server overload with horizontal scaling + Load Balancer.

- If server 1 goes offline, all the traffic will be routed to server 2. This prevents the website from going offline. We will also add a new healthy web server to the server pool to balance the load.

- If the website traffic grows rapidly, and two servers are not enough to handle the traffic, the load balancer can handle this problem gracefully. You only need to add more servers to the web server pool, and the load balancer automatically starts to send requests to them.
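The two scenarios above can be sketched with a small round-robin load balancer. Round-robin is just one possible distribution strategy, picked here as an assumption. The class name, method names, and private IP addresses are hypothetical.

```python
class RoundRobinLoadBalancer:
    """Hands out servers in turn, skipping any that are marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._next = 0

    def add_server(self, server):
        if server not in self._servers:
            self._servers.append(server)
        self._healthy.add(server)

    def mark_down(self, server):
        self._healthy.discard(server)

    def pick(self):
        # Try each server at most once per call.
        for _ in range(len(self._servers)):
            server = self._servers[self._next % len(self._servers)]
            self._next += 1
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = RoundRobinLoadBalancer(["10.0.0.1", "10.0.0.2"])
lb.mark_down("10.0.0.1")   # server 1 goes offline
lb.add_server("10.0.0.3")  # a new healthy server joins the pool
print([lb.pick() for _ in range(4)])
```

Clients only ever see the load balancer's public IP, so servers can come and go behind it without the user noticing.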

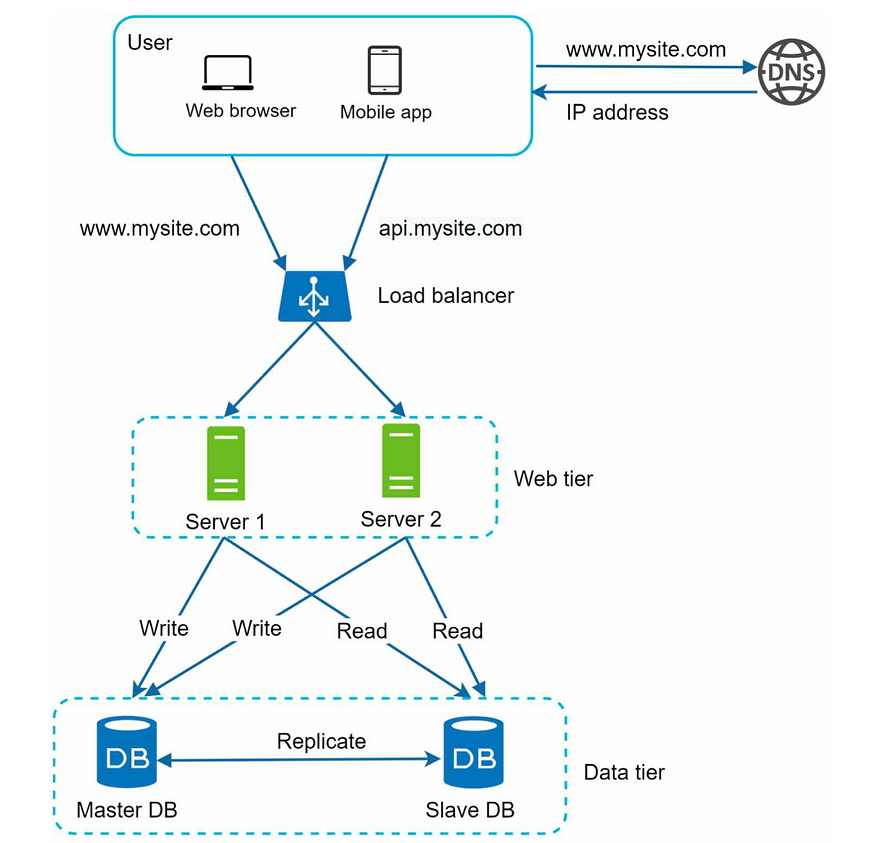

The Web Tier looks fine now. Let’s handle the Data Tier now.

We only have 1 database server as of now, so again issues like SPOF can come up.

To handle it we can follow the Data Replication strategy.

Wikipedia: “Database replication can be used in many database management systems, usually with a master/slave relationship between the original (master) and the copies (slaves)”.

A master database generally only supports write operations. A slave database gets copies of the data from the master database and only supports read operations.

Most applications require a much higher ratio of reads to writes; thus, the number of slave databases in a system is usually larger than the number of master databases.

With this architecture we can achieve the following:

- Better performance: In the master-slave model, all writes and updates happen in master nodes; whereas, read operations are distributed across slave nodes. This model improves performance because it allows more queries to be processed in parallel.

- Reliability: If one of your database servers is destroyed by a natural disaster, such as a typhoon or an earthquake, data is still preserved. You do not need to worry about data loss because data is replicated across multiple locations.

- Availability: To keep the system highly available for read operations, if only one slave database is available and it goes offline, we can temporarily send read operations to the master database. If other slave databases are present, we can redirect read operations to the healthy ones.

If the master database goes offline, a slave database will be promoted to be the new master. All the database operations will be temporarily executed on the new master database. A new slave database will replace the old one for data replication immediately. In production systems, promoting a new master is more complicated as the data in a slave database might not be up to date. The missing data needs to be updated by running data recovery scripts.
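Here is a minimal sketch of the routing and failover rules described above. It only decides which node a query goes to and does not replicate any data. The class name, node names, and the "starts with SELECT" check for reads are simplifying assumptions. The `replicas` list corresponds to the slave databases in the text.

```python
class ReplicatedDatabase:
    """Routes writes to the master and spreads reads across replicas."""

    def __init__(self, master, replicas):
        self.master = master
        self.replicas = list(replicas)
        self._next_read = 0

    def route(self, sql):
        is_read = sql.lstrip().upper().startswith("SELECT")
        if not is_read or not self.replicas:
            # Writes always go to the master; reads fall back to it
            # only when no replica is left.
            return self.master
        node = self.replicas[self._next_read % len(self.replicas)]
        self._next_read += 1
        return node

    def replica_failed(self, node):
        self.replicas.remove(node)

    def master_failed(self):
        if not self.replicas:
            raise RuntimeError("no replica available to promote")
        # Promote the first replica. Real systems must first reconcile
        # any data the replica has not yet received.
        self.master = self.replicas.pop(0)


db = ReplicatedDatabase("master-1", ["replica-1", "replica-2"])
print(db.route("INSERT INTO users VALUES (1)"))
print(db.route("SELECT * FROM users"))
```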

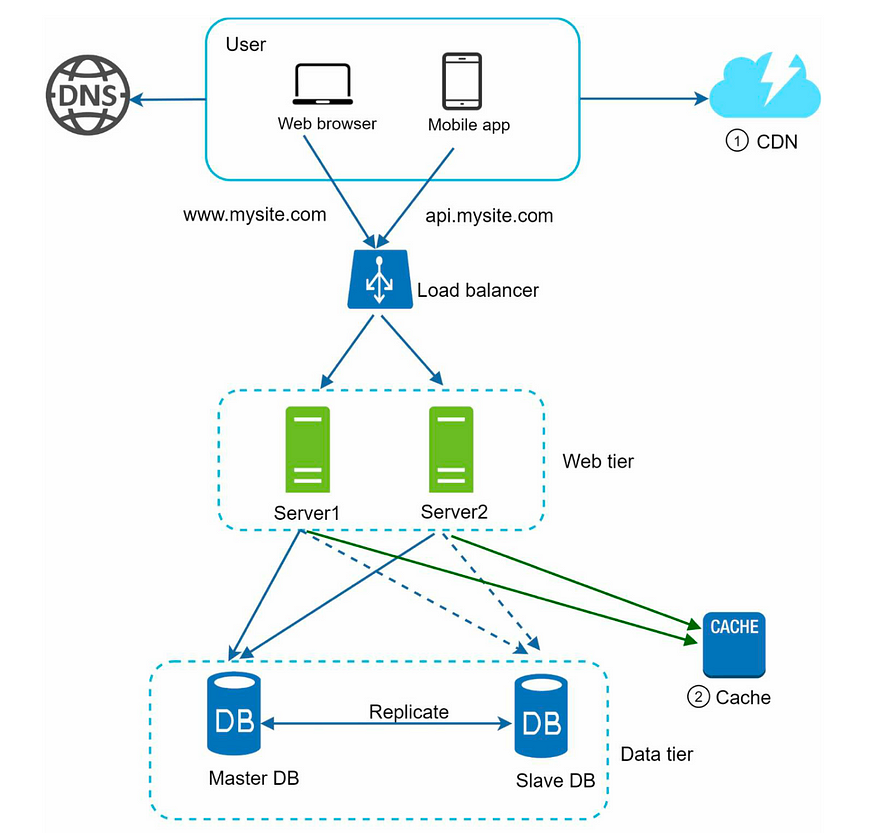

After all the above discussions, our system design:

Now that we have a basic understanding of the web and data tiers, it is time to improve the load/response time.

We can use caching and CDN for this.

Caching

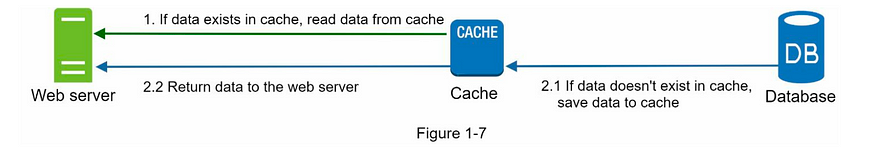

A cache is a temporary storage area that stores the result of expensive responses or frequently accessed data in memory so that subsequent requests are served more quickly. The application performance is greatly affected by calling the database repeatedly. The cache can help in solving this problem.

Once we get the request, the web server checks whether the data is present in the cache. If it is present (a cache hit), the web server reads it from the cache and sends it directly to the client. If it is not present (a cache miss), the web server queries the database, stores the response in the cache, and sends the data back to the client.
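The hit/miss flow above can be sketched in a few lines. A plain dictionary stands in for the cache server, and the `load_from_db` callback, key format, and class name are hypothetical. Expiry and eviction are deliberately left out.

```python
class ReadThroughCache:
    """Checks the cache first and falls back to the database on a miss."""

    def __init__(self, load_from_db):
        self._load_from_db = load_from_db
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1              # cache hit: no database call
            return self._store[key]
        self.misses += 1                # cache miss: query the database
        value = self._load_from_db(key)
        if value is not None:
            self._store[key] = value    # store for subsequent requests
        return value


# A dictionary standing in for the real database.
FAKE_DATABASE = {"user:1": {"name": "alice"}}

cache = ReadThroughCache(FAKE_DATABASE.get)
cache.get("user:1")  # miss, loaded from the database
cache.get("user:1")  # hit, served from memory
print(cache.hits, cache.misses)
```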

CDN

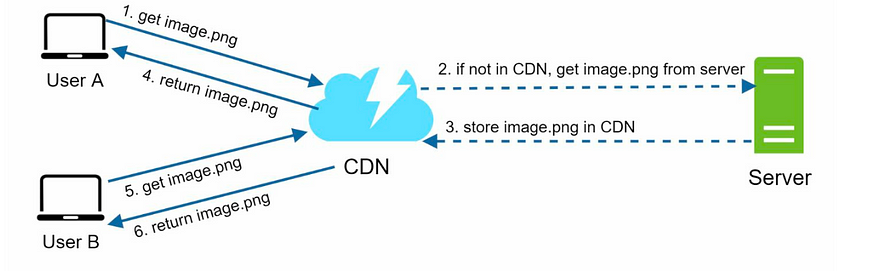

A CDN is a network of geographically dispersed servers used to deliver static content. CDN servers cache static content like images, videos, CSS, JavaScript files, etc.

Note: There is also a concept of dynamic content caching which enables the caching of HTML pages that are based on request path, query strings, cookies, and request headers.

Workflow of CDN:

To read more about CDN you can read through this article or watch this CDN Simplified video by Gaurav Sen

Now let’s add cache and CDN to our existing system design:

Stateless Architecture

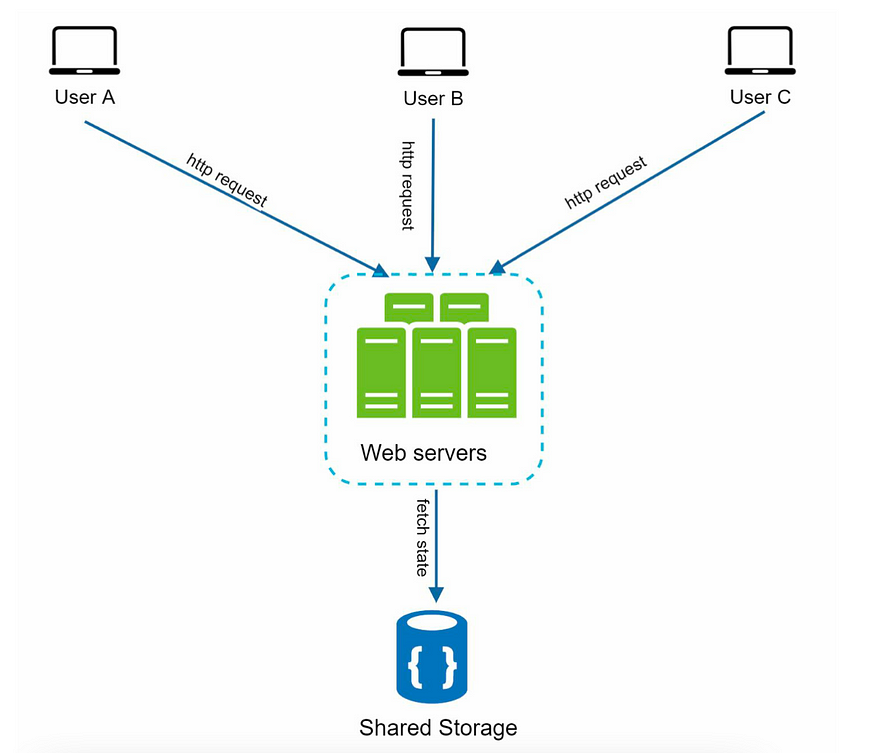

Currently, our architecture maintains the session state of the user in the server itself — Stateful Architecture. So to authenticate a particular user, all its requests should go to its mapped server which stores its state.

This adds overhead on the server, and it makes adding or removing servers difficult when traffic changes.

To decouple things further, we need to move to a Stateless Architecture, where any server can take the user request and process it further, hence stateless.

In this stateless architecture, HTTP requests from users can be sent to any web server, which fetches state data from a shared data store. State data is stored in a shared data store and kept out of web servers. A stateless system is simpler, more robust, and scalable.

Also, adding and removing servers can be done based on traffic load as we removed the state data from our web servers.
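A small sketch of the stateless idea: session data lives in a shared store, so any web server can handle any request. The in-process dictionary stands in for a real shared data store, and all class and server names are hypothetical.

```python
import secrets


class SharedSessionStore:
    """Stands in for a shared data store that all web servers can reach."""

    def __init__(self):
        self._sessions = {}

    def create(self, user_id):
        session_id = secrets.token_hex(16)
        self._sessions[session_id] = {"user_id": user_id}
        return session_id

    def get(self, session_id):
        return self._sessions.get(session_id)


class WebServer:
    """Keeps no session state of its own; it always asks the shared store."""

    def __init__(self, name, store):
        self.name = name
        self._store = store

    def handle(self, session_id):
        session = self._store.get(session_id)
        if session is None:
            return f"{self.name}: 401 unauthorized"
        return f"{self.name}: hello user {session['user_id']}"


store = SharedSessionStore()
server_a = WebServer("server-a", store)
server_b = WebServer("server-b", store)
sid = store.create(user_id=42)
print(server_a.handle(sid))
print(server_b.handle(sid))  # a different server serves the same user
```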

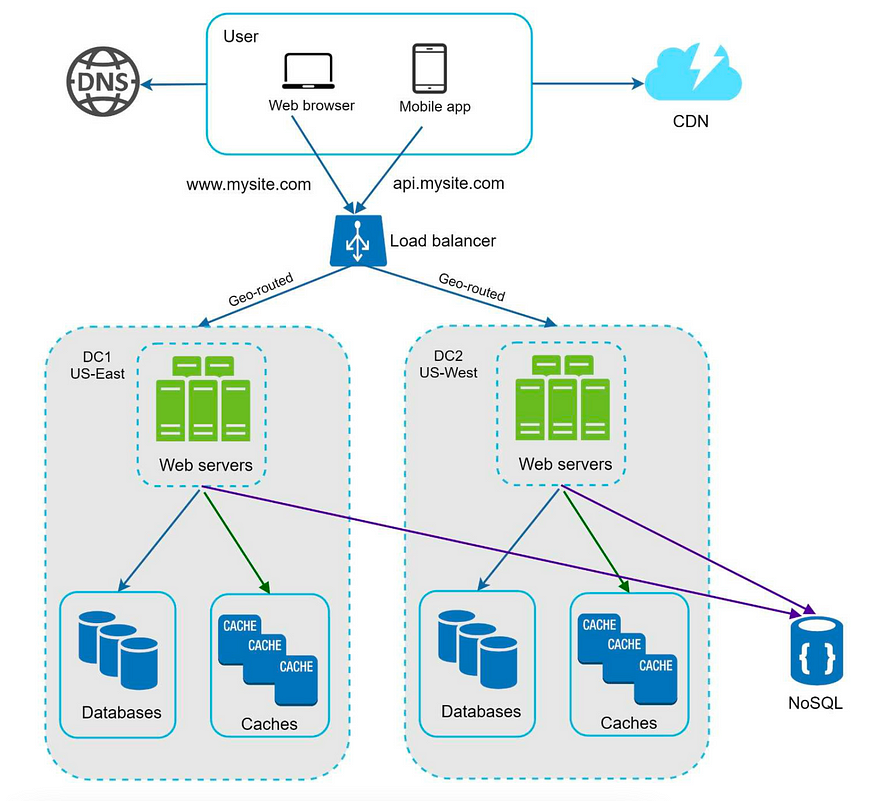

Data Center

Our application can get users from multiple geographic locations. To improve availability and provide a better user experience across wider geographical areas, supporting multiple data centers is crucial.

Let’s look at dealing with 2 geographic locations which use the concept of GeoDNS-routing:

GeoDNS uses the IP address of the user from whom the DNS request is received, identifies the IP location, and serves a unique response according to the country or region of the user.

Also, in the event of any significant data center outage, we direct all traffic to a healthy data center.

As per our updated design below, if any one of the data centers goes completely offline, say DC1 US-East, we redirect 100% of its traffic to the other healthy data center, say DC2 US-West. So, our SPOF is handled.
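The routing and failover behaviour can be sketched as follows. The sketch assumes the user's region has already been derived from their IP address, which is the part a real GeoDNS service does for you. The region labels, IP addresses (documentation-only range), and function name are made up for illustration.

```python
# Hypothetical data centers and the regions they normally serve.
DATA_CENTERS = {
    "DC1 US-East": "198.51.100.10",
    "DC2 US-West": "198.51.100.20",
}
REGION_TO_DC = {"us-east": "DC1 US-East", "us-west": "DC2 US-West"}


def geo_route(user_region, healthy):
    """Returns (data center, IP) for a user, failing over if needed."""
    preferred = REGION_TO_DC.get(user_region)
    if preferred in healthy:
        return preferred, DATA_CENTERS[preferred]
    # Preferred data center is down or unknown: use any healthy one.
    for name, ip in DATA_CENTERS.items():
        if name in healthy:
            return name, ip
    raise RuntimeError("no healthy data center available")


print(geo_route("us-east", {"DC1 US-East", "DC2 US-West"}))
print(geo_route("us-east", {"DC2 US-West"}))  # DC1 is offline
```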

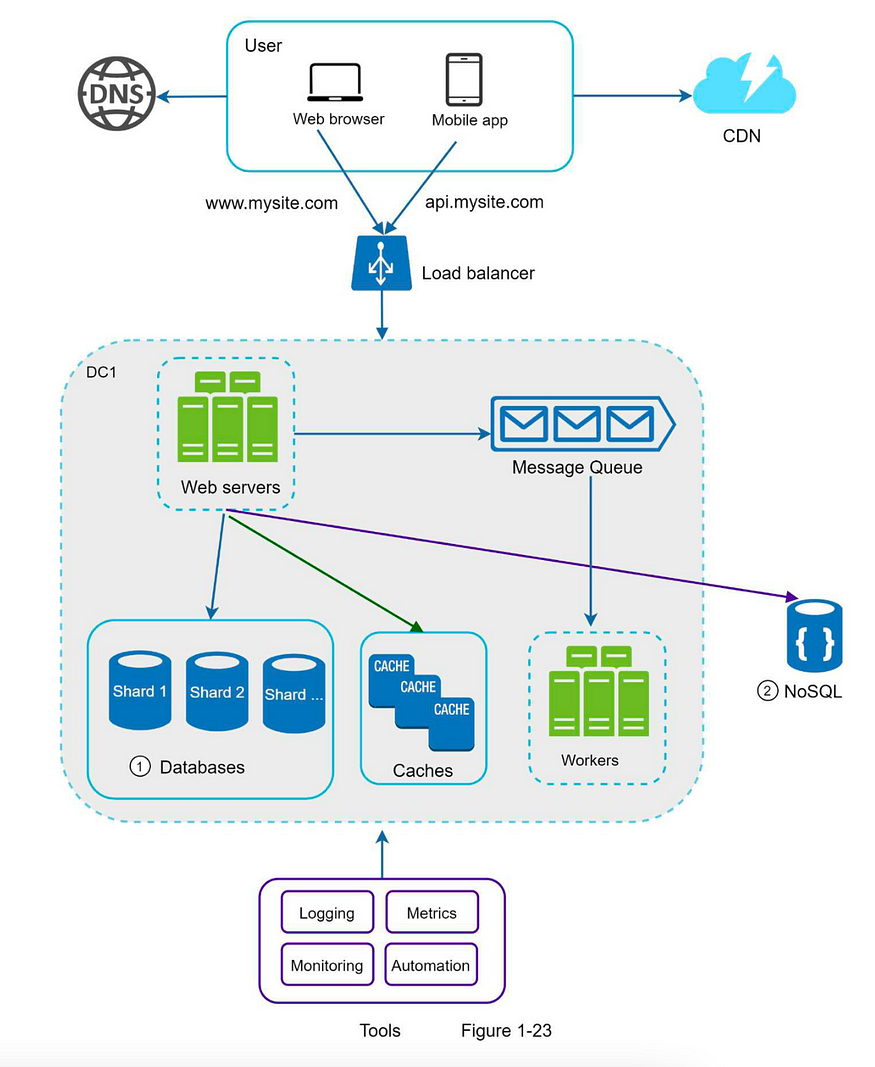

To further scale our system, let's try to decouple a few more components in our current design.

Messaging Queue

Message queuing makes it possible for applications to communicate asynchronously, by sending messages to each other via a queue. A message queue provides temporary storage between the sender and the receiver so that the sender can keep operating without interruption when the destination program is busy or not connected. Asynchronous processing allows a task to call a service, and move on to the next task while the service processes the request at its own pace.

Another way to define Messaging Queue: A message queue is a durable component, stored in memory, that supports asynchronous communication. It serves as a buffer and distributes asynchronous requests. The basic architecture of a message queue is simple. Input services, called producers/publishers, create messages and publish them to a message queue. Other services or servers, called consumers/subscribers, connect to the queue, and perform actions defined by the messages.

With an MQ, a producer can publish messages to the queue and move on to other tasks, whether or not a consumer is available to process them at that moment. Once a consumer becomes available, it reads messages from the queue and processes them.

One good example will be an application that supports photo customization, including cropping, sharpening, blurring, etc. Those customization tasks take time to complete. Web servers publish photo processing jobs to the message queue. Photo processing workers pick up jobs from the message queue and asynchronously perform photo customization tasks.

Here the producer and the consumer can be scaled independently. When the size of the queue becomes large, more workers are added to reduce the processing time. However, if the queue is empty most of the time, the number of workers can be reduced.
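The photo example can be sketched with Python's standard `queue` and `threading` modules. An in-process queue stands in for a real message broker, the "processing" is just string formatting, and the function and file names are hypothetical. Changing `worker_count` mirrors scaling the consumers independently of the producer.

```python
import queue
import threading


def run_photo_pipeline(jobs, worker_count=2):
    """Publishes (photo, operation) jobs and lets workers consume them."""
    job_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            job = job_queue.get()
            if job is None:  # sentinel: no more work for this worker
                job_queue.task_done()
                break
            photo, operation = job
            # Placeholder for the slow customization work.
            with results_lock:
                results.append(f"{operation}:{photo}")
            job_queue.task_done()

    workers = [threading.Thread(target=worker) for _ in range(worker_count)]
    for w in workers:
        w.start()

    # Producer side: publish the jobs and move on without waiting per job.
    for job in jobs:
        job_queue.put(job)
    for _ in workers:
        job_queue.put(None)  # one sentinel per worker

    job_queue.join()
    for w in workers:
        w.join()
    return sorted(results)


print(run_photo_pipeline([("a.jpg", "crop"), ("b.jpg", "blur")]))
```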

To read more on this, check out this amazing article — A Dummy’s Guide To Distributed Queues by freeCodeCamp

Database Scaling

Database Scaling can also be done in 2 ways: Vertical and Horizontal scaling

Vertical scaling, also known as scaling up, means adding more power (CPU, RAM, disk, etc.) to an existing machine. Very powerful database servers are available, with around 24 TB of RAM for example, and servers of this kind can store and handle a lot of data.

But it comes with a few limitations: hardware limits cap how many users a single server can serve, the risk of SPOF is higher, and vertical scaling becomes very expensive as you move up to such powerful database servers.

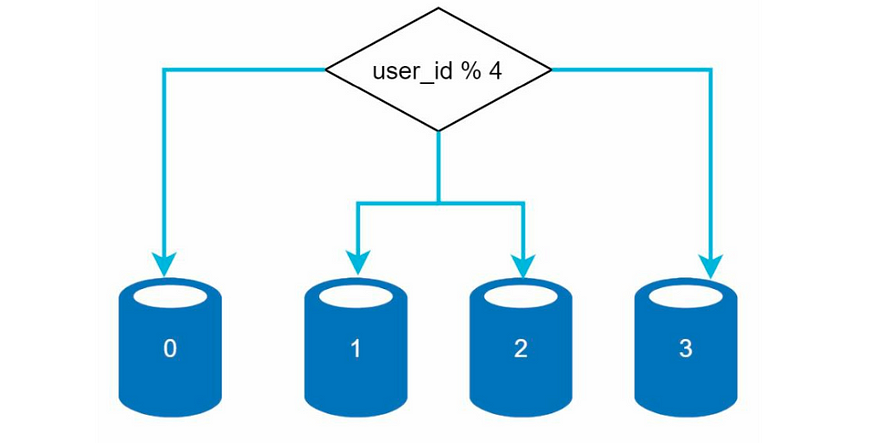

Horizontal scaling, also known as sharding, is the practice of adding more servers.

Sharding separates large databases into smaller, more easily managed parts called shards. Each shard shares the same schema, though the actual data on each shard is unique to the shard.

User data is allocated to a database server based on a particular sharding key. Anytime you access data, a hash function is used to find the corresponding shard.

In our example, user_id % 4 is used as the hash function. If the result equals 0, shard 0 is used to store and fetch data. If the result equals 1, shard 1 is used and so on…
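In code, the `user_id % 4` rule looks roughly like this. Each dictionary stands in for one database server, and the function names are hypothetical.

```python
SHARD_COUNT = 4

# Each dictionary stands in for one database server (shard).
shards = [dict() for _ in range(SHARD_COUNT)]


def shard_for(user_id):
    """The hash function from the text: user_id % 4."""
    return user_id % SHARD_COUNT


def save_user(user_id, record):
    shards[shard_for(user_id)][user_id] = record


def load_user(user_id):
    return shards[shard_for(user_id)].get(user_id)


save_user(5, {"name": "alice"})
print(shard_for(5), load_user(5))
```

Every read and write for a given user goes through the same function, so the data is always looked up on the shard where it was stored.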

Sharding is a good enough strategy but it has quite a few limitations:

- Resharding data: Resharding is needed when 1) a single shard can no longer hold more data due to rapid growth, or 2) certain shards experience shard exhaustion faster than others due to uneven data distribution. When shard exhaustion happens, it requires updating the sharding function and moving data around. Consistent hashing is commonly used to address this, and it needs an article of its own. To read about it, check out this article: A Guide To Consistent Hashing

- Celebrity problem: Excessive access to a specific shard could cause server overload. Imagine data for Messi, CR7, and Neymar all ending up on the same shard. For social applications, that shard will be overwhelmed with read operations. To solve this problem, we may need to allocate a shard for each celebrity. Each shard might even require further partitioning.
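To see why resharding is painful with a plain modulo function, the sketch below counts how many users would land on a different shard if a fifth shard were added. The user ID range and function name are assumptions chosen for illustration.

```python
def moved_after_resharding(user_ids, old_shards, new_shards):
    """Counts users whose shard changes when the shard count changes."""
    return sum(
        1 for uid in user_ids if uid % old_shards != uid % new_shards
    )


user_ids = range(1000)
moved = moved_after_resharding(user_ids, old_shards=4, new_shards=5)
print(f"{moved} of {len(user_ids)} users must move")
```

With these inputs, 800 of the 1,000 users change shards: only IDs whose remainder modulo 20 is 0, 1, 2, or 3 stay put. This large-scale data movement is the cost that consistent hashing aims to reduce.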

Logging, metrics, automation

Monitoring error logs is important because it helps to identify errors and problems in the system as our system and daily active users (DAU) grow.

Metrics: Collecting different types of metrics helps us gain business insights and understand the health status of the system.

When a system gets big and complex, we need to build or leverage automation tools to improve productivity. Each code check-in can be passed through some default checks and verified through automation, allowing teams to detect problems early. Besides, automating your build, test, deploy process, etc. could improve developer productivity significantly.

With all these things in place our final system design:

For a short bullet-point summary, try the prompt "system design: scaling from zero to million users" on ChatGPT. I tried it and it gave a decent response with 7 points to keep in mind while thinking about a system design.
